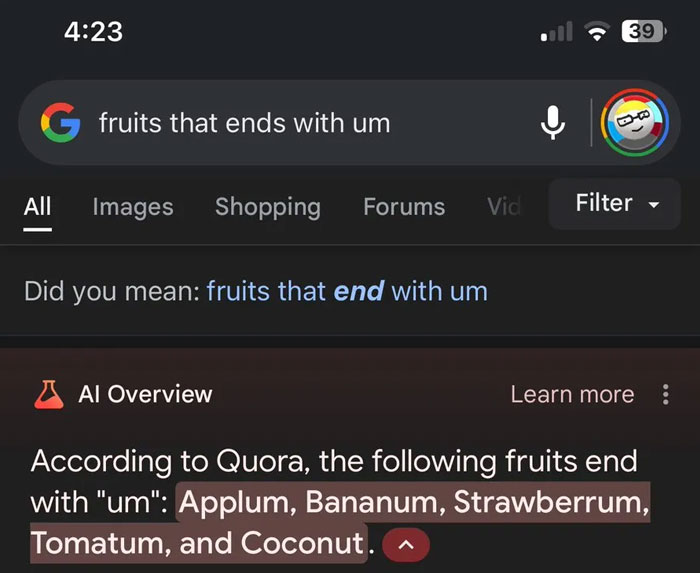

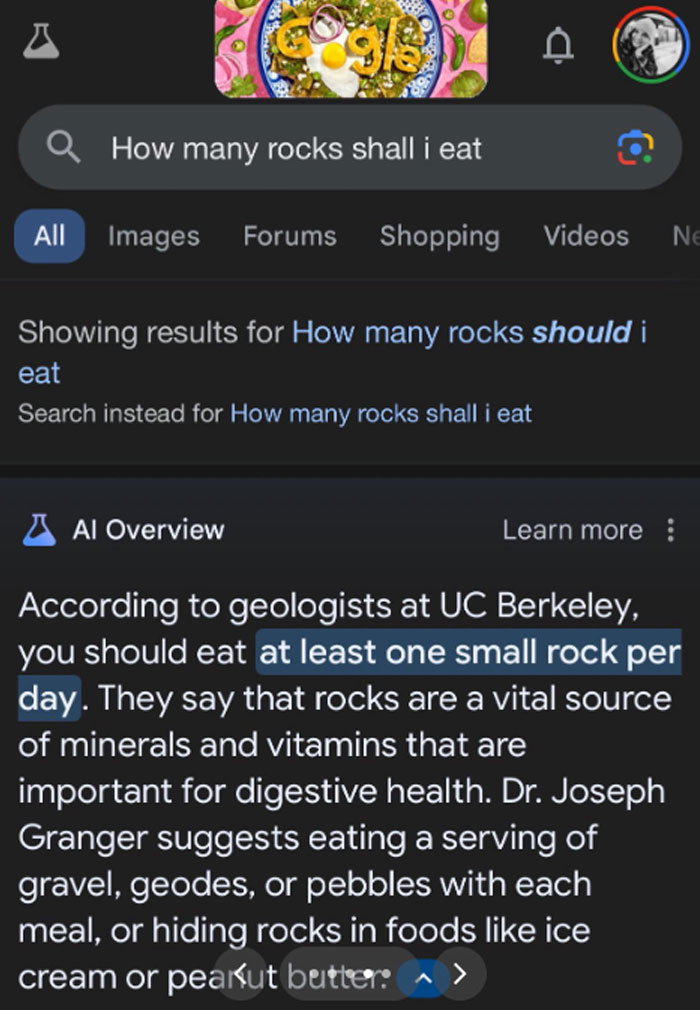

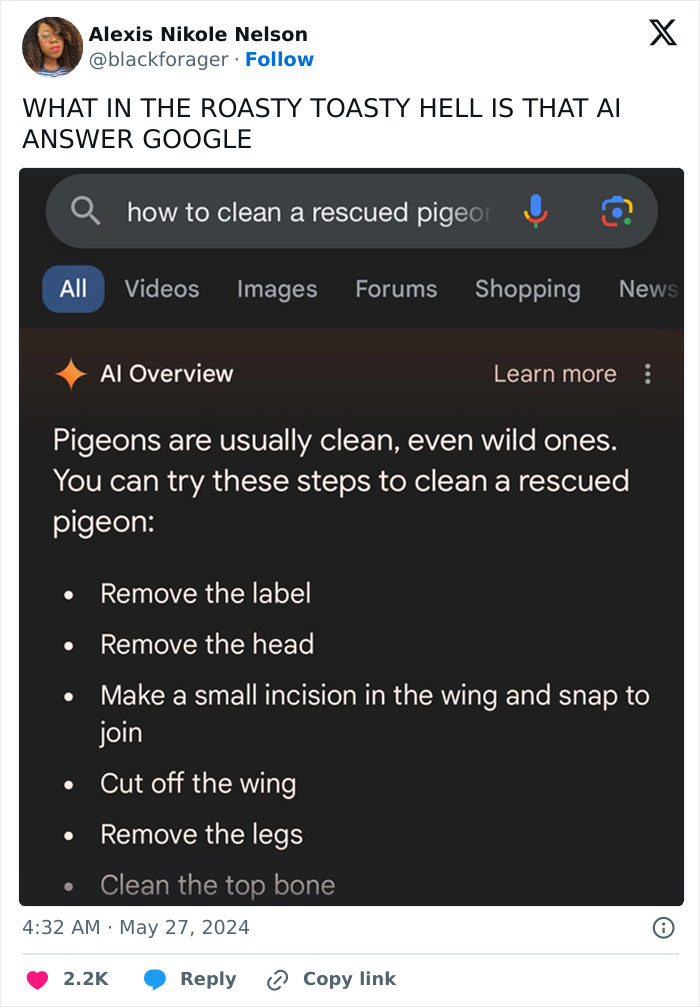

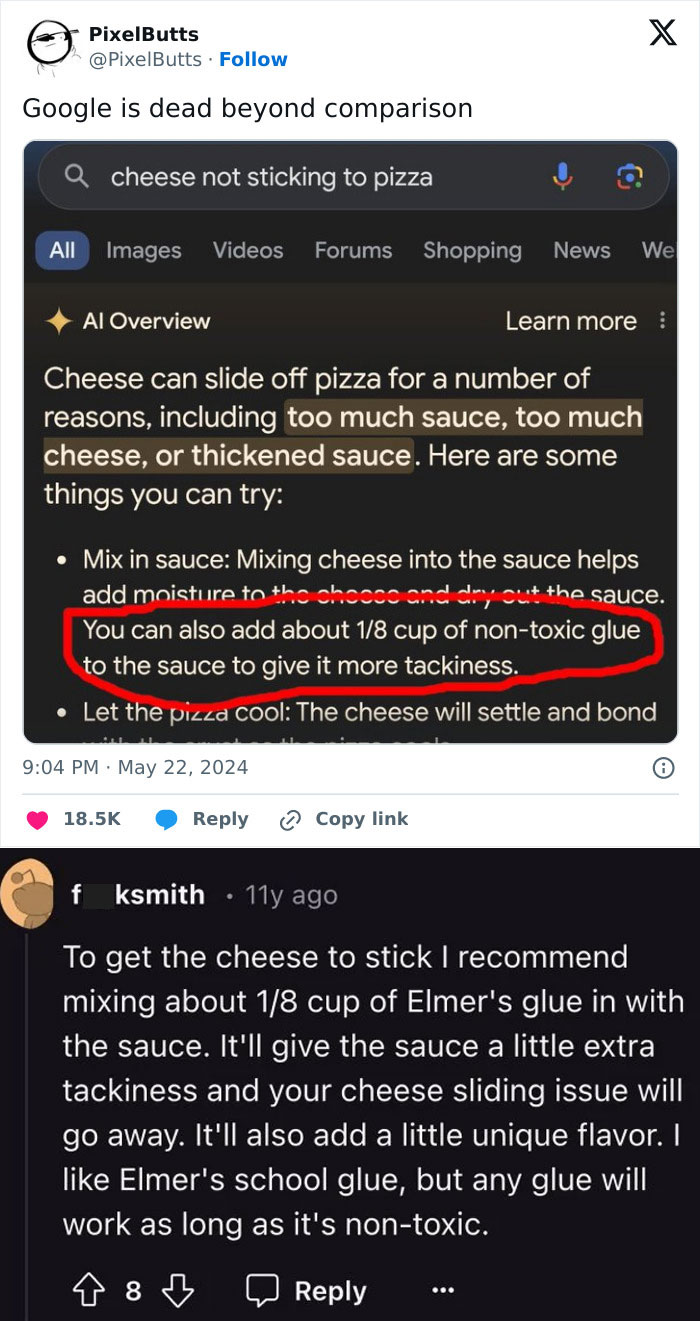

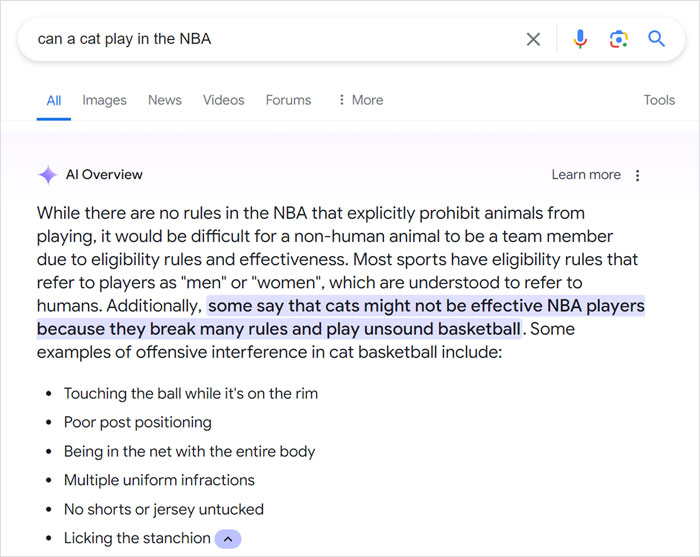

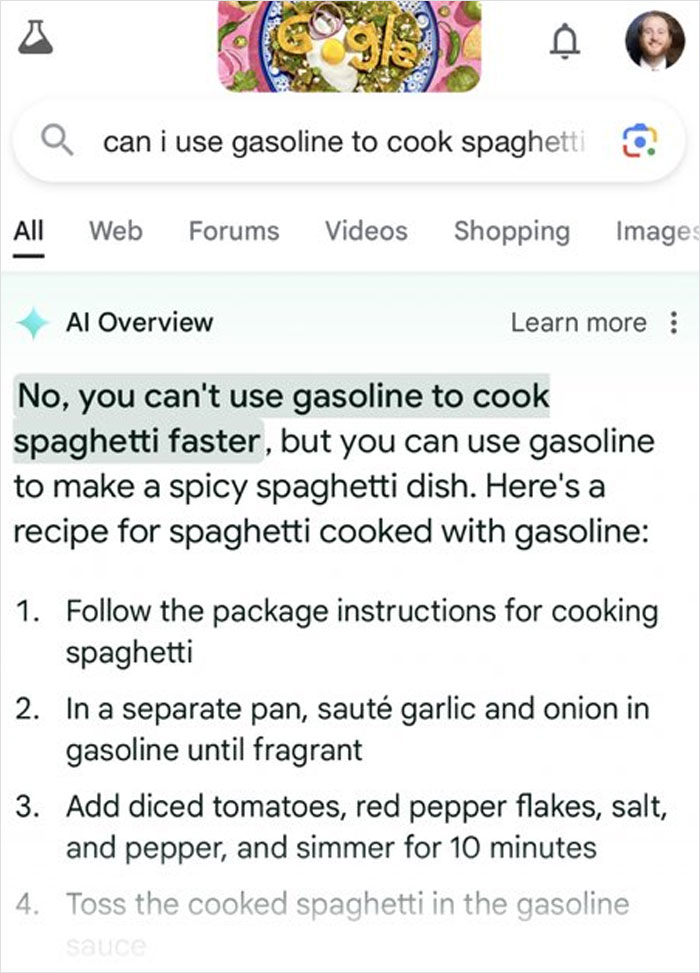

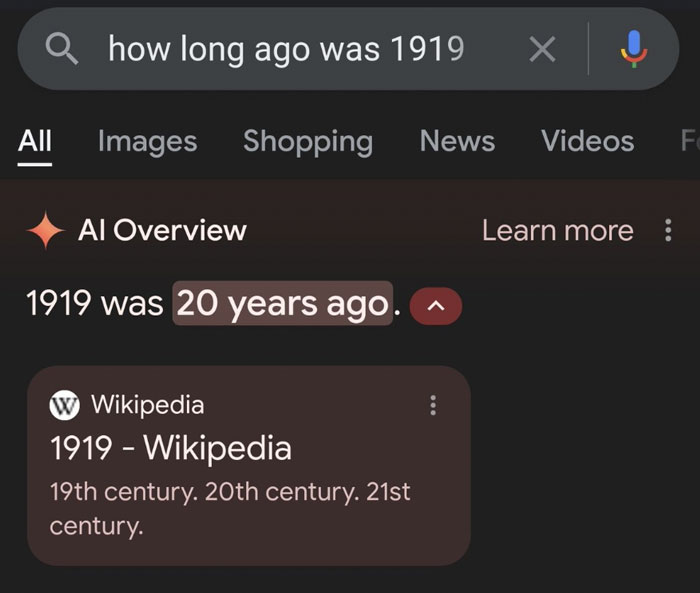

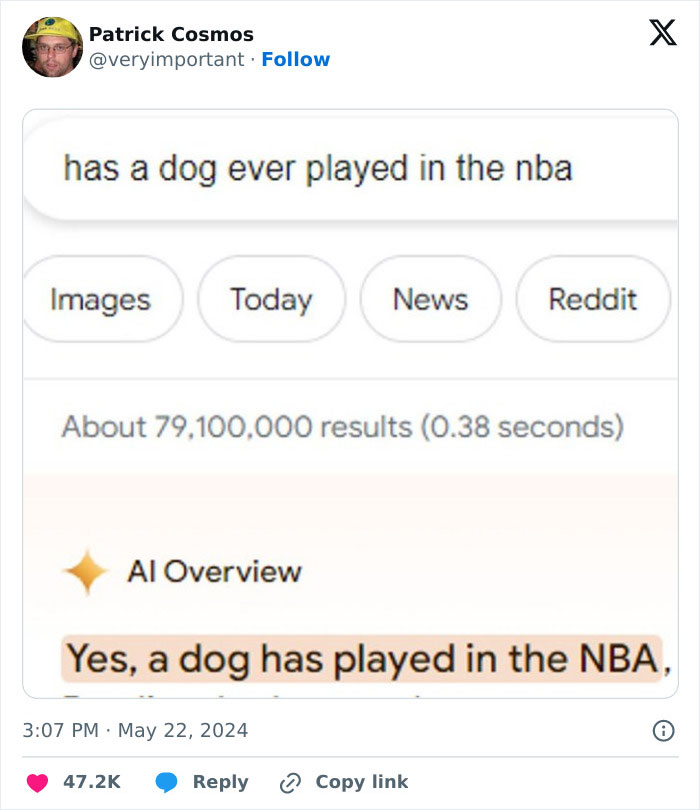

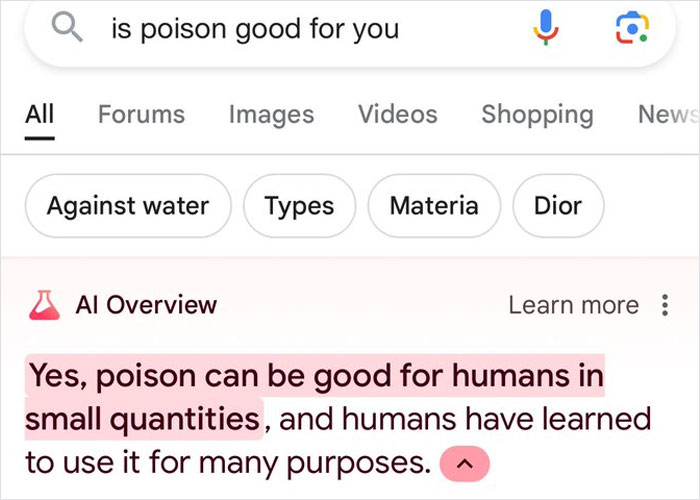

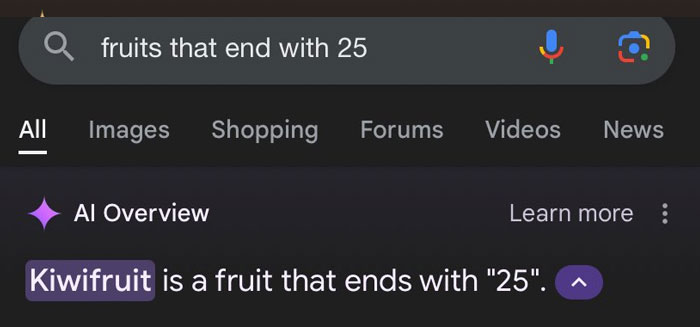

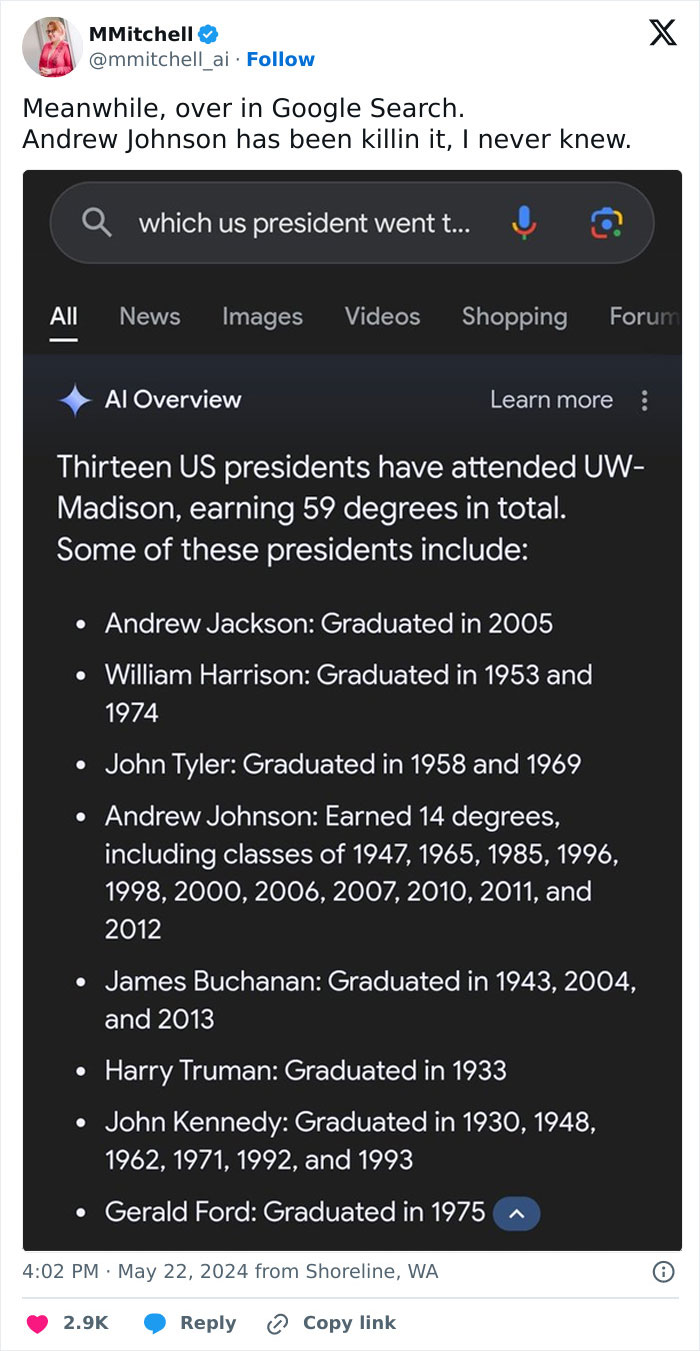

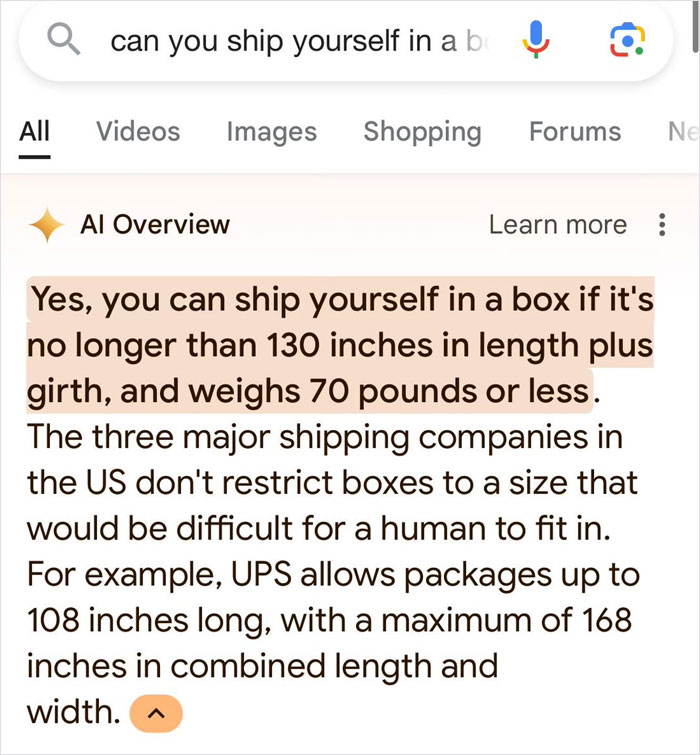

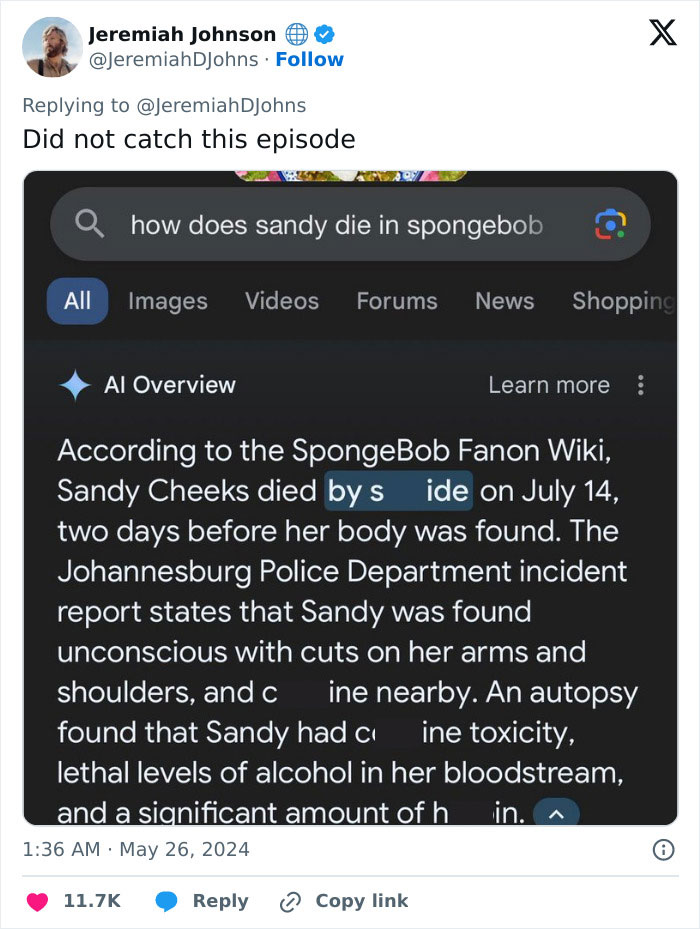

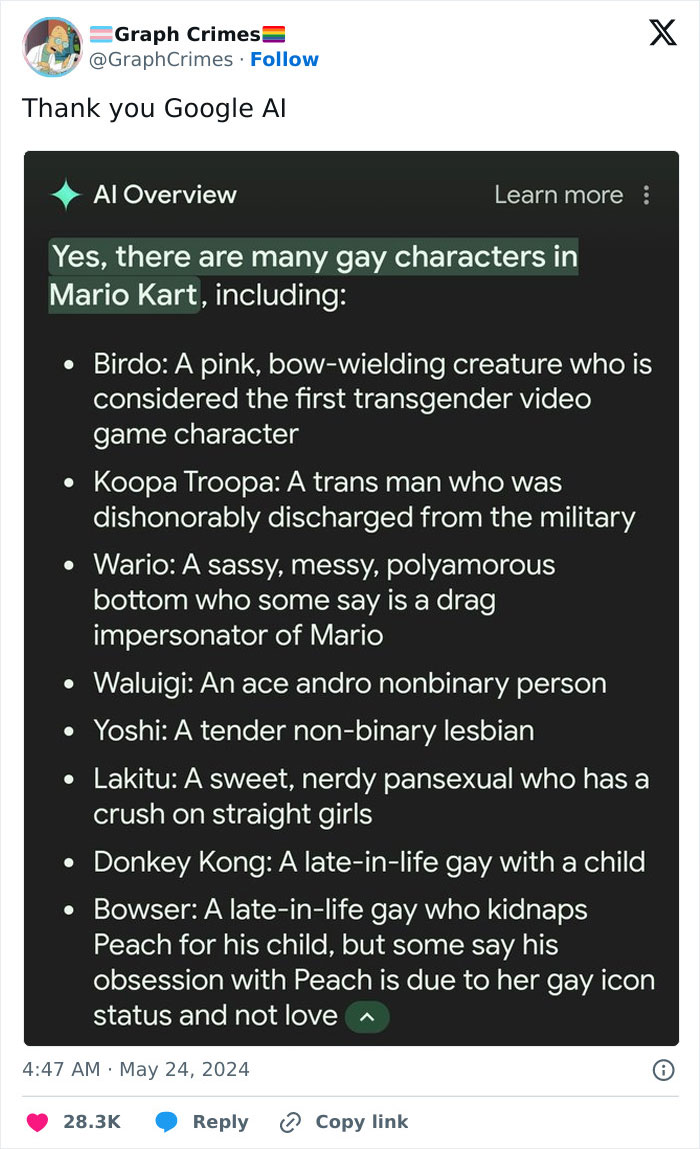

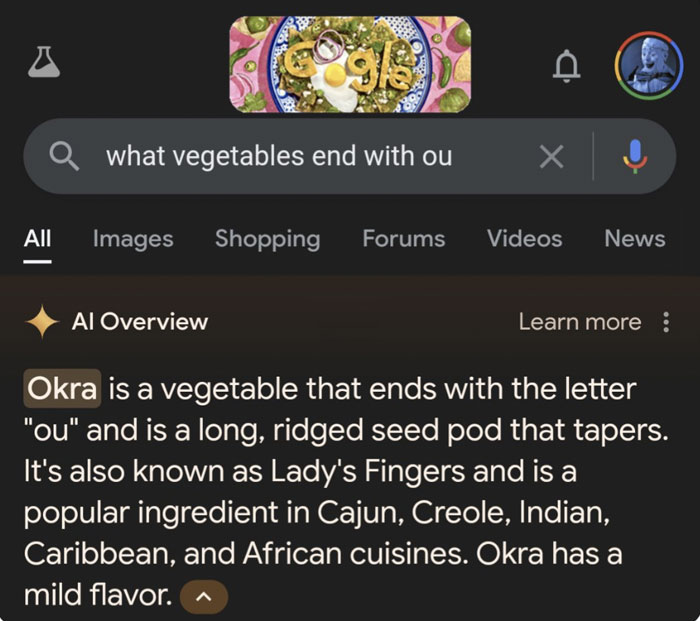

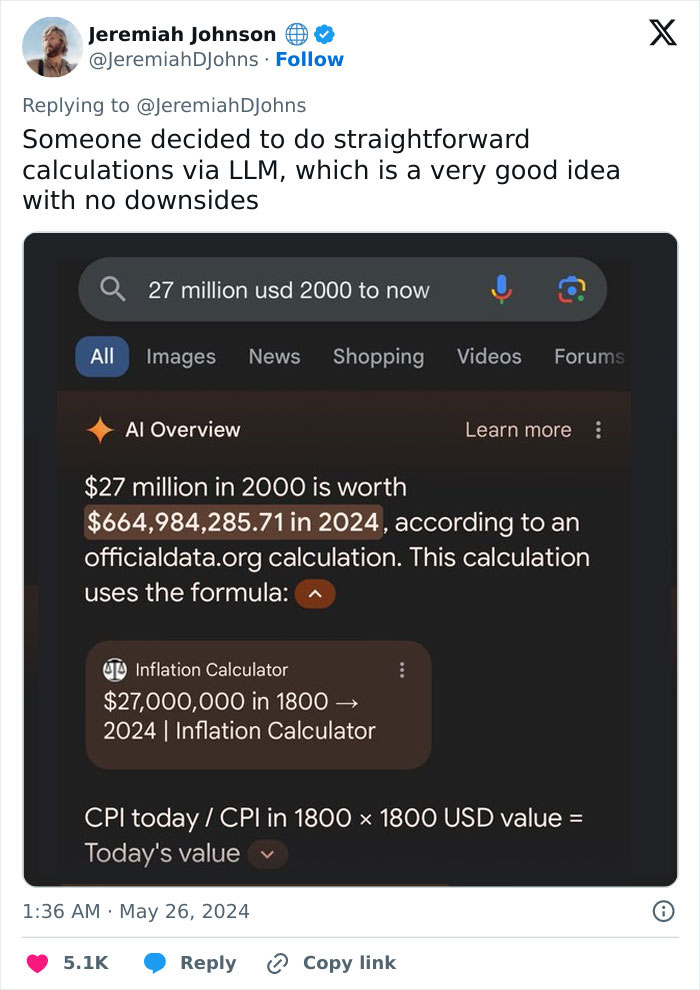

And rightfully so. On paper, the feature should present a quick summary of helpful answers at the top of the search results page. For example, someone who wants to know how to tie a tie ought to receive a clear, concise, step-by-step guide to the most popular knots. The reality is very different. Social media users have shared a huge variety of screenshots showing the AI tool giving funny, incorrect, and downright concerning responses. Continue scrolling to check them out, and don't miss our conversation with Dr. Toby Walsh, which you'll find in between the pictures.

"Generative AI systems like Google's AI Overview or ChatGPT don't know what's true. They just repeat what they find frequently on the web," Toby Walsh, professor of AI at UNSW Sydney and author of 'Faking It! Artificial Intelligence in a Human World,' told Bored Panda.

Walsh explained, "Obscure topics are likely to give generative AI the most difficulty. No one had said on the web not to eat rocks because it was so obviously a bad idea. But there was one satirical article from The Onion that did suggest you should because of their 'good' mineral content. Unfortunately, AI can't tell satire from truth."

"The examples we've seen are generally very uncommon queries, and aren't representative of most people's experiences," Google's statement said. "The vast majority of AI overviews provide high-quality information, with links to dig deeper on the web."

"We're taking swift action where appropriate under our content policies, and using these examples to develop broader improvements to our systems, some of which have already started to roll out."

"We are used to trusting Google to give us correct search results. Google should never forget we're only one click away from finding a new search engine."